Concept & System

Present-day mobile games often fall into one of two categories: those that want desktop-level complexity and therefore fill the screen with on-screen buttons, and those that want a simple, casual experience and therefore sacrifice gameplay complexity.

This system addresses that trade-off and attempts to maintain gameplay complexity even when the controls are simplified to a single touch input.

The backbone of the system is a neural network constructed from in-game scenarios separated into enemy classes, meaning that each class is evaluated differently on a per-scenario basis.

By continually learning from how the player fights, the system will pick up on specific behavior such as:

- "The player doesn't want to attack melee enemies when their health is low"

- "The player wants to target a ranged enemy if it is being buffed"

We can pick up on traits that are specific to each individual player when they show clear, intentional play. In any given scenario, if the player keeps attacking a certain enemy, thus showing intention, the system alters the values of each scenario in the neural network to make this enemy more likely to be targeted again in a similar scenario.
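The reinforcement step described above could be sketched roughly as follows. This is a minimal illustration, not the system's actual implementation: the `ScenarioWeights` class, the scenario keys, the neutral starting value of 0.5 and the learning rate are all assumptions made for the example.

```csharp
using System.Collections.Generic;

// Hypothetical sketch: class name, keys and update rule are assumptions.
public class ScenarioWeights
{
    // One learned value per scenario key, e.g. "Ranged/BeingBuffed".
    private readonly Dictionary<string, float> values = new Dictionary<string, float>();

    // Assumed step size for each piece of feedback.
    private const float LearningRate = 0.1f;

    // Returns the learned value, or an assumed neutral 0.5 for unseen scenarios.
    public float Get(string scenario)
    {
        return values.TryGetValue(scenario, out float v) ? v : 0.5f;
    }

    // Moves the value toward 1 when the player keeps attacking the suggested
    // enemy, and toward 0 when the player picks a different one.
    public void Reinforce(string scenario, bool playerConfirmed)
    {
        float goal = playerConfirmed ? 1f : 0f;
        float current = Get(scenario);
        values[scenario] = current + LearningRate * (goal - current);
    }
}
```

With this rule, repeatedly confirming a target in the same scenario nudges its value upward in small steps, so a single accidental tap has little effect while consistent, intentional play gradually dominates.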

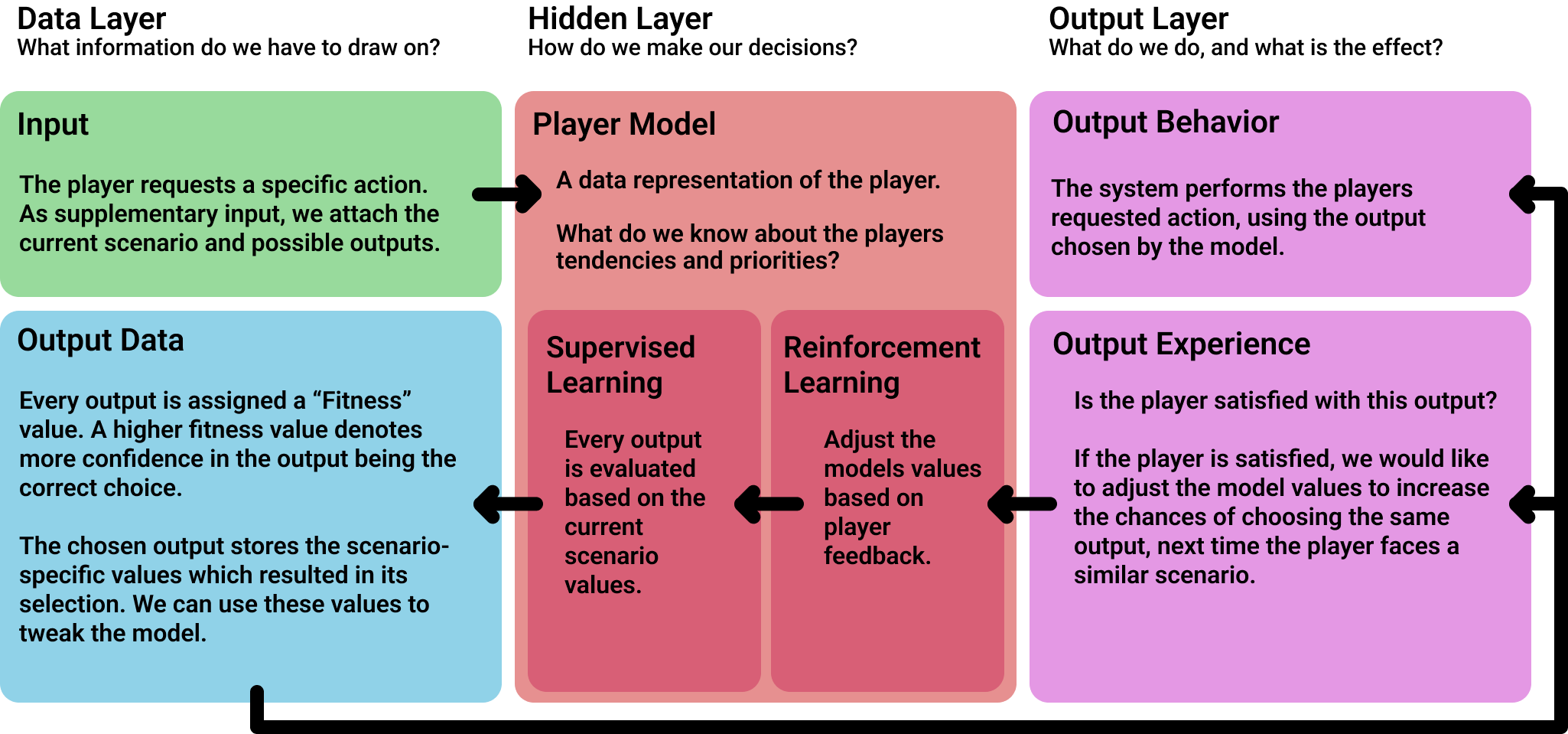

System Overview

Interesting Decisions

For the player's actions to be intentional and meaningful, we need to give the player meaningful decisions to make. In my playtests, three enemies each occupy their own specific role in combat. In addition to the different enemy types, other hazards, obstacles, weapons and pickups exist to further complicate the decision making and put further emphasis on the use of the system.

Original designs by Yuting Chen

Because all of these different affordances share the same single input by design, the system's ability to correctly predict player behavior becomes essential to the experience.

The ultimate goal of this system is to remove friction between player and game. By creating a more seamless experience, casual mobile players are free to enjoy the relatively limited time they have in a game session compared to similar desktop games.

Procedural Generation

For the playtest, I've created an infinitely varied playspace so that players meet every possible combat scenario presented by the combination of arena, enemies and hazards. The level is randomized not only in terms of layout, but also in how and where enemies spawn. Some rooms might have a steady flow of enemies, while other rooms lure you in with a pickup and then surround you with enemies.

The secondary purpose of using procedural generation is to eliminate the effects of steadily increasing difficulty and the player's increase in skill on the playtest data.
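As a rough illustration of randomizing how enemies spawn per room, a generator might pick one spawn pattern at random for each room. The `SpawnPattern` enum and `RoomGenerator` class below are hypothetical names invented for this sketch; only the two patterns themselves come from the description above.

```csharp
using System;

// Hypothetical spawn patterns, named after the two room types described above.
public enum SpawnPattern
{
    SteadyFlow,   // enemies arrive at a steady rate
    PickupAmbush  // a pickup lures the player in, then enemies surround them
}

public static class RoomGenerator
{
    // Picks a spawn pattern uniformly at random for a newly generated room.
    public static SpawnPattern PickPattern(Random rng)
    {
        var patterns = (SpawnPattern[])Enum.GetValues(typeof(SpawnPattern));
        return patterns[rng.Next(patterns.Length)];
    }
}
```

Passing in the `Random` instance, rather than creating one inside the method, makes it possible to replay a playtest session from a known seed.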

(Interactive visual: correlations between each datapoint)

Code Insight

A function from the Player targeting script

The player compiles a list of all targets within reach. With this list, alongside information about the state of battle (total enemies left) and its own state (health, armor, damage taken/dealt), it requests a target from the Machine Learning Supervisor.

```csharp
public AttackTarget GetTarget()
{
    // Get the current state of combat from the Arena Manager
    CombatState combatState = new CombatState();
    if (currentArena != null)
    {
        combatState = currentArena.combatState;
    }

    // Query the Machine Learning Supervisor for the best attack target given the current scenario.
    // Passes the list of targets, the player state and the combat state.
    AttackTarget target = MachineLearningSupervisor.Instance.RequestTarget(targets, myState, combatState);

    // RequestTarget returns null when no target is within reach
    if (target == null)
    {
        return null;
    }

    Debug.DrawLine(transform.position, target.transform.position, Color.green, 5f);

    // Start the attack sequence
    StartAttackSequence(target);

    return target;
}
```

A function from the Machine Learning Supervisor

The supervisor takes all of this information into consideration and loops over the targets, using the EvaluateTarget function to add up the values for each one. After selecting a target, the supervisor sends it back to the player and stores the selection for later feedback and reinforcement learning.

```csharp
public AttackTarget RequestTarget(List<AttackTarget> targets, CharacterState playerState, CombatState combatState)
{
    if (targets.Count == 0)
        return null;

    // If only one enemy is within reach, choose it immediately.
    if (targets.Count == 1)
    {
        targets[0].EvaluateTarget(playerState, combatState);
        lastScenario = targets[0].RequestScenarioValues();
        lastTarget = targets[0].targetFeatureSet;
        return targets[0];
    }

    // Loop over each enemy to determine the most likely target of the player
    float targetValue = 0;
    float currentValue = 0;
    AttackTarget currentTarget = null;
    foreach (AttackTarget target in targets)
    {
        if (target != bannedTarget)
        {
            currentValue = target.EvaluateTarget(playerState, combatState);
            Debug.Log(target.name + ": " + currentValue);

            // Visualize the score on the target, from red (low) to green (high)
            Color visColor = Color.Lerp(Color.red, Color.green, currentValue / 2);
            target.VisualizeValue(visColor);

            if (currentValue > targetValue)
            {
                currentTarget = target;
                targetValue = currentValue;
                bannedTarget = target;
            }
        }
    }

    // No candidate scored above zero, or every candidate was skipped
    if (currentTarget == null)
        return null;

    // Store the selection for later feedback
    lastScenario = currentTarget.RequestScenarioValues();
    lastTarget = currentTarget.targetFeatureSet;

    // The target itself should return the features it finds have the most impact on the final evaluation value

    return currentTarget;
}
```
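The EvaluateTarget function itself is not shown here. Based only on the description that it adds up values per scenario, one possible shape is sketched below. The `ScenarioScorer` class, the string scenario keys and the dictionary layout are assumptions for illustration, not the project's real types.

```csharp
using System.Collections.Generic;

// Hypothetical sketch: the real EvaluateTarget is not shown in this write-up.
public class ScenarioScorer
{
    // Learned value per scenario for one enemy class (assumed layout).
    private readonly Dictionary<string, float> scenarioValues;

    public ScenarioScorer(Dictionary<string, float> scenarioValues)
    {
        this.scenarioValues = scenarioValues;
    }

    // Adds up the learned values of every scenario that currently applies.
    // Scenarios without a learned value contribute nothing.
    public float Evaluate(IEnumerable<string> activeScenarios)
    {
        float total = 0f;
        foreach (string scenario in activeScenarios)
        {
            if (scenarioValues.TryGetValue(scenario, out float value))
            {
                total += value;
            }
        }
        return total;
    }
}
```

Keeping one scorer per enemy class would match the idea that each class is evaluated differently on a per-scenario basis, since the same scenario can then carry a different value for melee and ranged enemies.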

(Interactive visual: examples of how the model is used in different cases)